Deploy to DHS

Data Hub Central Community Edition (DHCCE) is platform neutral. It can be used with on-premises and cloud-deployed Data Hubs, as well as with the MarkLogic Data Hub Service (DHS).

To work with the current MarkLogic Data Hub Service on Azure, DHCCE 2.0.5 and later requires Data Hub Framework (DHF) 5.2.3.

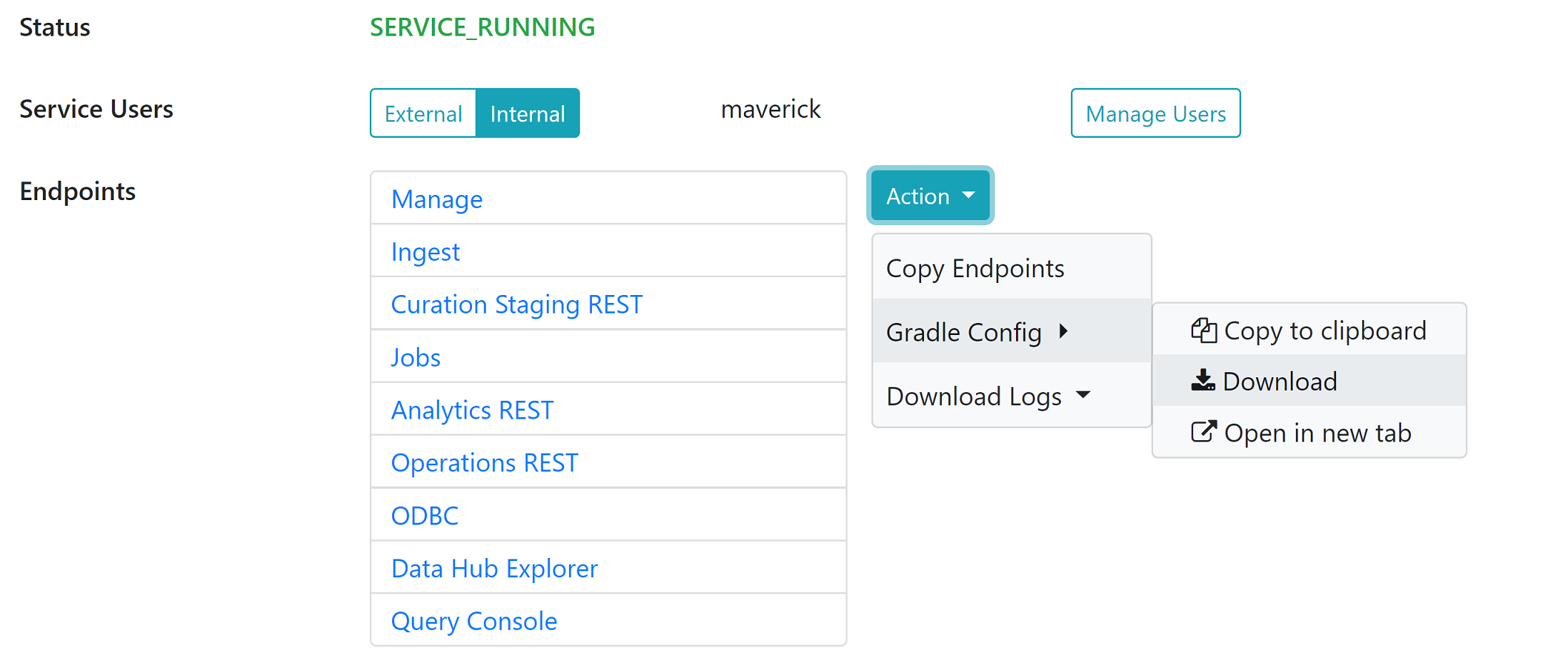

To use DHCCE with DHS, start by downloading the Gradle configuration file for your DHS instance. After downloading it, update the username and password in the file with your DHS credentials.

Save this file in your data hub root directory as “gradle-dhs.properties”.
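Before deploying, it can help to confirm that the file is in place and that the credentials were actually filled in. The following is a minimal sketch, not part of DHCCE or DHF: `check_props` is a hypothetical helper, and the key names `mlUsername` and `mlPassword` are assumptions based on common ml-gradle conventions, so adjust them to match the keys in the file you downloaded.

```shell
#!/bin/sh
# Hypothetical helper: verify that a properties file exists and that each
# listed key has a non-empty value. It does not validate the credentials.
check_props() {
  file="$1"; shift
  [ -f "$file" ] || { echo "missing file: $file"; return 1; }
  for key in "$@"; do
    # Accept "key=value" or "key = value"; require a non-blank value.
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*[^[:space:]]" "$file" \
      || { echo "empty or missing key: $key"; return 1; }
  done
  echo "ok: $file"
}

# Example call from the data hub root directory (key names are assumptions):
# check_props gradle-dhs.properties mlUsername mlPassword
```

The check only looks at the text of the file, so it will not catch a wrong password; it simply guards against deploying with the placeholders left blank.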

Use Gradle to deploy your data hub to DHS.

gradlew hubDeploy -PenvironmentName=dhs -i

Stop DHCCE, and restart it using the new properties file so that it points to your DHS instance.

java -DdhfEnv=dhs -jar hub-central-community-2.0.5.jar
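The deploy and restart steps can be chained so that DHCCE is only started when the deployment succeeds. This is a sketch under a few assumptions: a Unix-like shell where the Gradle wrapper is invoked as `./gradlew`, a DHCCE process that has already been stopped, and a `DRY_RUN` switch that is a hypothetical addition here for previewing the commands.

```shell
#!/bin/sh
# Sketch: deploy to DHS, then start DHCCE against the same environment.
# The environment name and jar name come from the commands above.
ENV_NAME="${ENV_NAME:-dhs}"
JAR="${JAR:-hub-central-community-2.0.5.jar}"

run() {
  # Print the command; execute it unless DRY_RUN is set to 1.
  echo "+ $*"
  [ "${DRY_RUN:-0}" = "1" ] || "$@"
}

deploy_and_start() {
  # Stop here if the deployment fails, so DHCCE is not started
  # against a hub that was only partially deployed.
  run ./gradlew hubDeploy "-PenvironmentName=${ENV_NAME}" -i || return 1
  run java "-DdhfEnv=${ENV_NAME}" -jar "$JAR"
}

# Preview the commands without running them:
# DRY_RUN=1 deploy_and_start
```

Both commands use the same environment name, which is what ties the Gradle deployment and the DHCCE process to the same `gradle-dhs.properties` file.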

Log in to DHCCE and revisit the Admin pane to confirm that you're using DHCCE with your DHS Data Hub instance.

You can load your data and run your flows using Gradle, then use DHCCE Explore to explore your data and curate entities that require mastering. Alternatively, you can load data and run your flows from DHCCE itself.

To run your flows in DHCCE, navigate to the Admin pane and click the “Run Flows” button. DHCCE reads the data for each ingest step from the location that step points to on the machine where DHCCE is running, then transfers that data to the DHS Data Hub for processing. All defined flows are run at that point as well.

With either method, you can then explore and master your data in DHS using DHCCE.